LLM Experiments

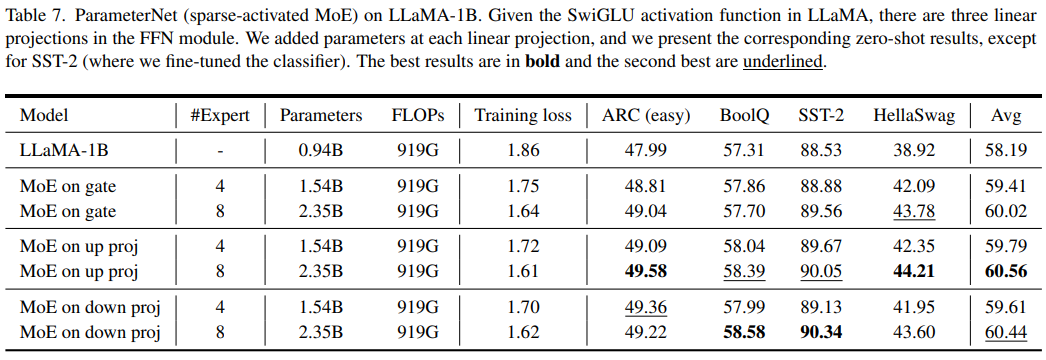

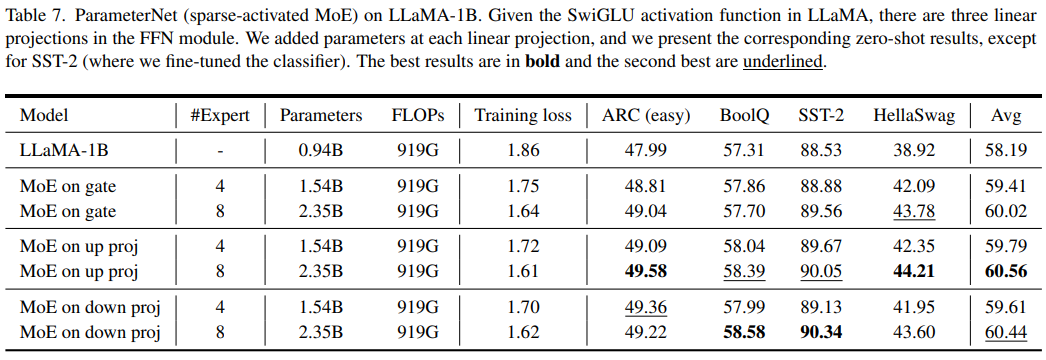

Large-scale visual pretraining has significantly improved the performance of large vision models. However, we observe a *low FLOPs pitfall*: existing low-FLOPs models cannot benefit from large-scale pretraining. In this paper, we introduce a novel design principle, termed ParameterNet, which aims to increase the number of parameters in large-scale visual pretraining models while minimizing the increase in FLOPs. We leverage dynamic convolutions to incorporate additional parameters into the networks with only a marginal rise in FLOPs. The ParameterNet approach allows low-FLOPs networks to take advantage of large-scale visual pretraining. Furthermore, we extend the ParameterNet concept to the language domain to enhance inference results while preserving inference speed. Experiments on the large-scale ImageNet-22K dataset show the superiority of our ParameterNet scheme. For example, ParameterNet-600M achieves higher accuracy on ImageNet than the widely used Swin Transformer (81.6% vs. 80.9%) with much lower FLOPs (0.6G vs. 4.5G). In the language domain, LLaMA-1B enhanced with ParameterNet achieves 2% higher accuracy than vanilla LLaMA.
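To make the "more parameters, nearly the same FLOPs" claim concrete, the sketch below counts parameters and multiply-accumulates (MACs) for a plain convolution and for a dynamic convolution that mixes several expert kernels per input. The layer shape, the number of experts, the single-linear-layer router, and the function names `conv_cost` and `dynamic_conv_cost` are illustrative assumptions, not the configuration used in the paper; biases and the cost of global average pooling are ignored.

```python
def conv_cost(c_in, c_out, k, h_out, w_out):
    """Parameters and MACs of a plain k x k convolution (bias ignored)."""
    params = c_in * c_out * k * k
    macs = h_out * w_out * params  # one kernel application per output position
    return params, macs


def dynamic_conv_cost(c_in, c_out, k, h_out, w_out, num_experts):
    """Parameters and MACs of a dynamic convolution with `num_experts` kernels.

    Assumed structure (hypothetical, for illustration only):
      1. global average pooling of the input (cost ignored here),
      2. a linear router c_in -> num_experts producing mixing coefficients,
      3. a weighted sum of the expert kernels,
      4. one ordinary convolution with the mixed kernel.
    """
    base_params, base_macs = conv_cost(c_in, c_out, k, h_out, w_out)
    router_params = c_in * num_experts
    params = num_experts * base_params + router_params
    mix_macs = num_experts * base_params  # weighted sum over expert kernels
    macs = base_macs + router_params + mix_macs
    return params, macs


if __name__ == "__main__":
    # Hypothetical layer: 64 -> 64 channels, 3x3 kernel, 56x56 output, 4 experts.
    shape = dict(c_in=64, c_out=64, k=3, h_out=56, w_out=56)
    p0, m0 = conv_cost(**shape)
    p1, m1 = dynamic_conv_cost(**shape, num_experts=4)
    print(f"parameter ratio: {p1 / p0:.3f}")
    print(f"MAC ratio:       {m1 / m0:.4f}")
```

Under these assumptions the parameter count grows roughly in proportion to the number of experts, while the extra MACs (router plus kernel mixing) stay small relative to the convolution itself whenever the number of experts is much smaller than the number of output positions.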

@misc{han2023parameternet,
  title={ParameterNet: Parameters Are All You Need},
  author={Kai Han and Yunhe Wang and Jianyuan Guo and Enhua Wu},
  year={2023},
  eprint={2306.14525},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}